Optimise crawl budget to improve website performance

by David Dwyer on 09/08/2018

How optimising your crawl budget will improve the performance of your website

Hopefully, now that your website has launched, you’re doing your homework and checking its Google web stats regularly. There’s a wealth of information there to help you earn a return on your investment.

Chances are, though, that with so much information at your fingertips and so many demands on your time, you haven’t got much further than looking at your audience split, their favourite landing pages and the overall site visit trend over the last week, month or quarter.

Am I right?

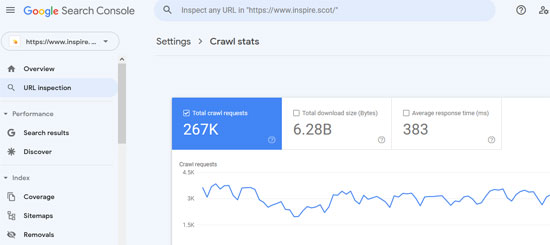

I’m willing to bet you’ve never clicked on Crawl Stats in Google Search Console.

Ed: Just to bring you up to speed before you read any further:

So you may be missing a trick.

By way of explanation, let’s borrow a saying widely attributed to Albert Einstein: “Compound interest is the eighth wonder of the world. He who understands it, earns it ... he who doesn't ... pays it.”

The same principle applies to search engine optimisation (SEO). There’s a whole lot more to SEO than proper tags, relevant keywords, great design elements and a steady stream of high-quality content, and if you take a little time to understand what else helps (or simply ask us), you can earn a greater return on your investment in your website.

The other analogy is leapfrog: what is the minimum you need to do to leap over the next frog, i.e. to move above the site ranked just ahead of you on the search engine results page (SERP)?

As Google Premier Partners, we understand how to compound the effect of these measures with the more subtle ‘mysteries’ that Google stats can reveal.

However, let me be clear: this is no dark art.

Helping clients with their SEO is one of our most valued services.

Your crawl budget is a good example.

What is your “crawl budget”?

The best explanation is this one from Google themselves; however, this summary from Search Engine Land is slightly more approachable!

“Here is a short summary of what was published, but I recommend reading the full post.”

Optimising your investment

All search engines rely on spiders (also called crawlers) to gather information about your website; the best known are Googlebot and Bingbot (Microsoft’s equivalent).

Googlebot discovers new pages and adds them to the Google index. Your site’s crawl budget reflects the number of pages Googlebot (or another spider) crawls on your website in any given period.
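Crawl Stats in Search Console shows this figure for Googlebot, but you can get a rough picture of any spider’s activity from your own server logs. The sketch below counts requests per day whose user agent contains a given crawler name. It assumes the common Apache/Nginx “combined” log format, and the sample log lines are hypothetical. Matching on the user-agent string alone is a simplification: that string can be faked, so Google recommends a reverse DNS lookup to confirm genuine Googlebot traffic.

```python
import re
from collections import Counter
from datetime import datetime

# Hypothetical sample lines in the "combined" access log format.
SAMPLE_LOG = [
    '66.249.66.1 - - [08/Aug/2018:10:15:32 +0000] "GET /about HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [08/Aug/2018:10:15:40 +0000] "GET /services?sort=price HTTP/1.1" 200 6144 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.5 - - [08/Aug/2018:11:02:10 +0000] "GET / HTTP/1.1" 200 8192 "https://www.example.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '66.249.66.1 - - [09/Aug/2018:07:01:05 +0000] "GET /contact HTTP/1.1" 200 4096 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

# Group 1: the date part of the [timestamp]; group 2: the last quoted field (the user agent).
LINE_RE = re.compile(r'\[(\d{2}/\w{3}/\d{4}):[^\]]*\].*"([^"]*)"$')


def crawler_hits_per_day(lines, bot="Googlebot"):
    """Count requests per calendar day whose user agent contains `bot`.

    Note: this trusts the user-agent string; it does not verify the
    requesting IP address belongs to the search engine.
    """
    counts = Counter()
    for line in lines:
        match = LINE_RE.search(line)
        if not match:
            continue  # skip lines that are not in the expected format
        day_text, user_agent = match.groups()
        if bot in user_agent:
            day = datetime.strptime(day_text, "%d/%b/%Y").date()
            counts[day] += 1
    return counts


if __name__ == "__main__":
    for day, hits in sorted(crawler_hits_per_day(SAMPLE_LOG).items()):
        print(day, hits)  # 2018-08-08 2, then 2018-08-09 1
```

Swapping `bot="Googlebot"` for `bot="bingbot"` gives the same view for Microsoft’s spider. A sudden drop in daily hits is often the first sign that something on the site is making crawling harder.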

There are three key reasons this might matter to you:

The better you manage your crawl budget, therefore, the faster all this will happen, whether with Google, Bing, Yahoo or anyone else.

Don’t be left hamstrung by Open Source platforms

Some templates for Open Source platforms rely on multiple plug-ins that have been updated countless times, leaving the code on your pages cluttered, inefficient and far longer than it needs to be. That can upset the spiders! Some of the things you can do to help improve your crawl budget include:

Efficient crawling of your website will help with its indexing in Google Search and help improve your SEO performance.

For bigger sites, or those that auto-generate pages based on URL parameters, deciding how much of your server’s resources to allocate to crawling is also important.
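Parameter-driven pages are a common drain on crawl budget because every variant URL (sorted, tracked, tagged with a session ID) can be crawled separately even when it shows essentially the same content. The sketch below groups a list of crawled URLs by a normalised form to show how many collapse into one page. The set of “ignored” parameters is a hypothetical assumption for illustration; on a real site you would decide which parameters genuinely change the content, then point search engines at the preferred version, for example with `rel="canonical"` tags or robots.txt rules.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: query parameters assumed NOT to change the page's content.
IGNORED_PARAMS = {"sessionid", "sort", "utm_source", "utm_medium", "utm_campaign"}

# Hypothetical list of URLs a spider requested.
CRAWLED = [
    "https://www.example.com/shoes?sort=price",
    "https://www.example.com/shoes?sessionid=abc123",
    "https://www.example.com/shoes?utm_source=newsletter&sort=name",
    "https://www.example.com/shoes?colour=red",
    "https://www.example.com/shoes",
]


def canonical_url(url):
    """Drop ignored parameters, sort the rest and lower-case the host.

    The #fragment is also dropped, since browsers never send it to the server.
    """
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def group_variants(urls):
    """Map each normalised URL to the list of crawled URLs that share it."""
    groups = {}
    for url in urls:
        groups.setdefault(canonical_url(url), []).append(url)
    return groups


if __name__ == "__main__":
    groups = group_variants(CRAWLED)
    print(f"{len(CRAWLED)} crawled URLs, {len(groups)} distinct pages")
    for page, variants in groups.items():
        print(page, "<-", len(variants), "variant(s)")
```

Here five requests cover only two distinct pages, so four of them were spent on what is effectively the same “shoes” page. Keeping `colour` as a meaningful parameter is a deliberate choice in this sketch, since a filtered page may well deserve to be crawled in its own right.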

The thing to remember is that, to you at least, every web page has value, but to spiders, not every web page is of equal value.

You can influence how highly they rate your pages.

Inspire work proactively to deliver a return on your investment

So, do you simply plan to spend online, or will you ‘compound’ your investment to create a return?

By improving your page performance, you (or we) can deliver a better user experience for site visitors, with faster page responses, cleaner code and improved traffic and/or engagement (bookings, sales, enquiries etc.). It’s a win-win.

This might make your head hurt, just like compound interest, but if you take time to understand it, you’ll see your site performance improve.

While other web developers might be happy just to publish your site and move on, we take the view that this is when the real hard work starts: we then work actively with all our clients to deliver value from their sites.

Wherever they are based (and we have hundreds of clients across the UK and internationally), we will discuss how elements like a crawl budget review can help them respond to the evolving web and gain more value online. We do this at least twice every year, but you can ask us anytime. You might also like to read about our process, which uses PRINCE2, the gold standard of project management. We believe this makes us stand out from the crowd.

Call now on (+44)1738 700 006.

Customer Service, Google Trends, Inspire Web Development, Inspire Web Services, Internet of Things, Search Engine Optimisation, The Evolving Web, Web Consultancy, Web Design, Website Support